Actually, we were early adopters of Player/Stage, a predecessor of ROS. Back then, vendors were hesitant to join the Robot Operating System buzz. Generic protocols, software packages, and visualization tools were something each company developed internally, again and again. Linux was considered good for academia and hackers, and Microsoft was trying to get a foot in the door of the robotics market with Microsoft Robotics Studio.

Back then, making a driver work usually meant compiling your own Linux kernel and reading through obscure forums by candlelight, or, as my lab professor would say, “Here be dragons.” By the time you could see real image data streaming through your C++ code, your laptop's graphics driver would usually stop working due to incompatible dependencies, and Ubuntu would crash on boot.

More than a decade has passed since then. ROS has come into the picture, making data visualization, SLAM algorithms, and robot navigation something that anyone with some free time and a step-by-step tutorial can follow, test, and customize. Robotic sensor and platform vendors are now embracing ROS themselves and releasing git repositories with ready-made ROS nodes, the same nodes they used to test and develop the hardware.

A Shift in Robotics Development

This gives the impression that the basic growing pains of robotic software development are long gone. Buying off-the-shelf components and building your own robot has never been easier, and that is before we even talk about simulation tools and the cloud.

Here is a weird fact: most robots created today are still closed boxes; their OS cannot be updated, and they are not ROS-based. iRobot, for example, discussed in 2019 its intention to move from a proprietary operating system to a ROS-based one (source), and is currently using ROS only for testing its infrastructure on AWS RoboMaker (source). This is just one example. Look at the robots around you: many of their issues will never be fixed, and their behavior will not change much. Even today's ROS-based robots will eventually be replaced by a new robot running a whole new OS with a new ROS release. But what happens to the old robot and the old code? Remember when phones behaved that way? Before FOTA (Firmware Over The Air). Before app stores. Before Android.

Here is another fun fact: every new robot out in the wild has a blog post written about it. Some YouTuber is uploading a review of it and comparing it to other vendors' models and algorithms. Why aren't the companies doing that to begin with? Because whole setups are hard to break down and reassemble, and program flows are not transferable. We know: in 2012 we released some basic behavior tree decision-making code to ROS, and it took until ROS 2 to see a behavior engine used as a standard ROS component. Have you ever tried to reconfigure move_base between robots, set up TFs, and retune the thresholds for negative and positive obstacles when the sensor type or position changed? Updating the robot's simulation model? Making sure dependencies are met across the various ROS versions provided by the vendor?

Sounds like we are back to square one, doesn’t it?

Breaking the Cycle

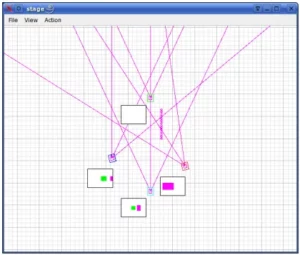

Cogniteam’s 3D configuration editor


Cogniteam started with those examples in mind, as a way to break the cycle by providing tools to develop, package, deploy, and manage cloud-connected robots. Our cloud-based platform uses containerized applications as software components. On our platform, these components can be organized, connected, and reassembled through code, a console interface, or a web GUI, so that anyone, even without ROS-specific know-how, can understand and see the building blocks that make up the robot's execution. Breaking the mission down into containerized blocks also removes the problematic coupling between OS and ROS versions: it provides isolation and lets different ROS distributions run on the same robot, including ROS 1 and ROS 2 components side by side.

Components can now be replaced easily, which makes testing alternative algorithms simpler, and robot access can be shared between operators and developers so the robot can be reached remotely at any time. This does not require installing anything on the robot itself, as all installations are managed by an agent running as a service on the robot. Multiple users can view live data or access and change the robot's configuration. Using the platform backbone is like having a whole DevOps team inside your team.

The robot configuration, and the tools for viewing and editing it, are also important parts of the platform. By building the robot model on the platform (configuring its sensors and drivers), you can keep track of driver versions, monitor devices, generate TFs (coordinate transforms for the components), and auto-generate a simulation for your code. This lets you change a sensor's location or type and test alternative scenarios in simulation, all without writing any code. The platform also provides introspection and visualization, and analytics tools are coming soon to ease robot development and bring on the robotic revolution.
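To make the TF part more concrete: a transform generated from a robot model is, at its core, a rigid transform from the robot's base frame to each sensor's frame. The sketch below is a hypothetical, simplified illustration, not Cogniteam's implementation and not the ROS `tf2` API. `SensorMount` and `sensor_to_base` are names invented for this example, and it assumes the sensor is rotated only about the vertical axis (roll and pitch are zero).

```python
import math
from dataclasses import dataclass


@dataclass
class SensorMount:
    """Hypothetical sensor mount pose relative to base_link (meters, radians)."""
    x: float
    y: float
    z: float
    yaw: float  # rotation about the vertical z axis only; roll/pitch assumed zero


def sensor_to_base(mount: SensorMount, point):
    """Map a point (px, py, pz) from the sensor frame into the base_link frame."""
    px, py, pz = point
    c, s = math.cos(mount.yaw), math.sin(mount.yaw)
    # Rotate about z by the mount's yaw, then translate by the mount offset.
    return (
        mount.x + c * px - s * py,
        mount.y + s * px + c * py,
        mount.z + pz,
    )


# A lidar mounted 0.2 m forward and 0.3 m up, rotated 90 degrees to the left.
lidar = SensorMount(x=0.2, y=0.0, z=0.3, yaw=math.pi / 2)

# An obstacle 1 m straight ahead of the lidar lands about 1 m to the robot's left.
print(sensor_to_base(lidar, (1.0, 0.0, 0.0)))
```

Moving or swapping a sensor in this sketch only changes the `SensorMount` values; any code that consumes points in the base frame stays the same, which is the kind of decoupling a generated TF tree is meant to provide.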

Log in, start developing right now, and stay tuned for what is to come.

We have just started.