In dynamic scenarios, it is nearly impossible to anticipate and prepare for every conceivable situation. HRI allows robots to bridge this gap by enabling them to seek assistance from humans when faced with uncertainties or missing information, enhancing their problem-solving abilities. The ability of a robot to interact with humans in real-time fosters collaborative problem-solving and ensures a more adaptive response to the unpredictable nature of dynamic environments. However, achieving seamless and effective HRI is no small feat; it requires overcoming technological, psychological, and societal challenges. The pursuit of these ambitious goals in the realm of HRI highlights Cogniteam’s dedication to creating advanced robotic systems that can not only coexist but actively collaborate with humans to accomplish complex tasks.

In the vast landscape of HRI, various implementations have emerged, each holding the potential to enhance the way humans and robots communicate. However, the key to a transformative HRI experience lies not just in the availability of diverse components but in their seamless integration. Until recently, efforts to create a cohesive system for HRI, capable of completing the sense-think-act cycle of a robot while remaining extensible and modular for future innovations, were scarce.

Recognizing this crucial gap, the Israeli HRI consortium, comprised of leading robotics companies and esteemed university researchers in the field, is developing a global solution. At the forefront of this initiative, Cogniteam is leading the HRI toolkit group within the consortium. In the HRI Toolkit, all the products of the consortium are implemented and embedded in an extensive ROS2 graph, meticulously crafted to fulfill the sense-think-act cycle of robots through multiple layers of cognition.

Fig 1: The multiple (main) Layers of Cognition in the HRI Toolkit as an infinite cycle of natural interaction with a human.

The “Basic Input” layer is responsible for acquiring speech and 3D skeleton models from the robot sensors. The onboard data stream from an array of microphones, a depth camera, and other sensors allows this layer to fuse directional speech with 3D skeletons. In addition, this layer keeps track of the 3D bodies as they roam near the robot.

Given this information, the “Interpreter” layer is responsible for interpreting different social cues. A unique algorithm learns and detects, on the fly, different visual gestures that can later be linked to direct robot reactions or interpretations about the person. An emotion detector is used to detect the facial expressions of the interacting person. These emotions can later be used for the reinforcement of specific robot gestures that receive implicit positive feedback. A text filtering mechanism enables the detection of vocal instructions, facts, and queries made by the person.

One of the most novel and interesting implementations of the HRI toolkit is the “Context Manager” layer, which is responsible for collecting all of the social information, including the current and past interactions, the social context of the environment, the state of the robot, and its tasks. This social information is provided by the person manager component, the ad-hoc scene detectors layer (e.g., intentional blockage detection), and additional information from the user code via an integration layer (e.g., map, robot state and tasks, detected objects, etc.). “Scene Understanding” is a key component that is responsible for processing this information and extracting useful social insights such as user patterns and availability. As such, this layer can truly benefit from the release of novel generative AI components such as image transformers and LLMs.

The “Reasoning” layer checks whether the collected social information is sound and consistent. It does so with the help of novel Anomaly Detection Algorithms. Whenever a contradiction in the robot’s beliefs is detected, some information is flagged as missing, or some other anomalous event has occurred, this layer detects it and passes it onward – typically leading the robot to ask for help.
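
To make the idea concrete, here is a minimal sketch of a consistency check over a set of beliefs. It is not the toolkit's anomaly detection algorithm, only a simple rule-based stand-in: the `Belief` record, the component names, and the `find_issues` and `help_request` helpers are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Belief:
    key: str      # what the belief is about, e.g. "door_state"
    value: str    # the believed value, e.g. "open"
    source: str   # reporting component (hypothetical names below)


def find_issues(beliefs, required_keys):
    """Return readable issues: contradicting values and missing facts."""
    values_by_key = {}
    for belief in beliefs:
        values_by_key.setdefault(belief.key, set()).add(belief.value)

    issues = []
    for key in sorted(values_by_key):
        values = values_by_key[key]
        if len(values) > 1:  # two sources disagree about the same fact
            issues.append(f"contradiction on '{key}': {sorted(values)}")
    for key in sorted(required_keys):
        if key not in values_by_key:  # nothing is known about a needed fact
            issues.append(f"missing information: '{key}'")
    return issues


def help_request(issues):
    """Turn detected issues into a request for help, or None if consistent."""
    if not issues:
        return None
    return "I need help: " + "; ".join(issues)


beliefs = [
    Belief("door_state", "open", "scene_detector"),
    Belief("door_state", "closed", "user_statement"),
    Belief("user_name", "Dana", "person_manager"),
]
issues = find_issues(beliefs, required_keys={"door_state", "delivery_target"})
print(help_request(issues))
```

In this toy run the robot holds two conflicting values for the door and knows nothing about the delivery target, so both issues end up in a single request for help.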

The “Social Planning” layer issues timed, personalized, socially aware plans for interaction. This layer contains components such as “Social Reinforcement Learner” and “Autonomy Adjuster”, which adapt to specific users and social contexts; “Natural Action Selector”, which acts as the decision maker for issuing proactive and interactive instructions; “Scheduler”, which decides when to “interrupt” the user by applying different interruption management heuristics; “Social Navigator”, which adds a social layer on top of typical navigation algorithms such as Nav2; and other relevant components.

Finally, the “Natural Action Generator” layer applies these instructions in a manner that will be perceived as natural by humans, constantly adjusting different dynamic parameters and applying visual and vocal gestures according to an HRI-Code-Book. For instance, it may apply movement gestures that relay gratitude or happiness or play a message at a certain volume and pace that fits the given social context. Given these actions, if the human reacts naturally, these actions are again perceived by the “Basic Input” and thus the sense-think-act cycle continues.

Recently, NVIDIA released several generative AI tools that are very relevant for HRI. These include optimized tools and tutorials for deploying open-source large language models (LLMs), vision language models (VLMs), and vision transformers (ViTs) that combine vision AI and natural language processing to provide a comprehensive understanding of the scene. These tools are specifically relevant for the “Context Manager” layer and the “Scene Understanding” component of our HRI Toolkit. At the heart of this layer is the LLM, a pivotal component that significantly contributes to the nuanced understanding of social interactions.

In our exploration of this critical layer, we delve into the integration of NVIDIA’s dockerized LLM component, which is a part of NVIDIA’s suite of generative AI tools. Our experimental robot “Michelle” uses the NVIDIA Jetson AGX Orin developer kit with 8GB RAM. However, in order to run both the HRI toolkit and the LLM component on-site, we had to use the Jetson AGX Orin dev kit with 64GB RAM as an on-premises server that can run the LLM component and serve several robots at the same time using application programming interface calls. The HRI clients, e.g., Michelle, use cache optimizations to reduce server loads and response times.
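
As an illustration of client-side caching, the sketch below wraps a query function with a small least-recently-used cache that also expires old answers. The class name `CachedLLMClient`, its parameters, and the `fake_server` stand-in are assumptions made for this example; the actual cache policy used on Michelle is not described here.

```python
import time
from collections import OrderedDict


class CachedLLMClient:
    """Wraps a query function with a small LRU cache whose entries expire."""

    def __init__(self, query_fn, max_entries=128, ttl_seconds=300.0,
                 clock=time.monotonic):
        self._query_fn = query_fn        # performs the real API call
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache = OrderedDict()      # prompt -> (timestamp, response)
        self.server_calls = 0            # how often the server was contacted

    def ask(self, prompt):
        now = self._clock()
        entry = self._cache.get(prompt)
        if entry is not None and now - entry[0] <= self._ttl:
            self._cache.move_to_end(prompt)   # mark as recently used
            return entry[1]
        response = self._query_fn(prompt)     # cache miss or expired entry
        self.server_calls += 1
        self._cache[prompt] = (now, response)
        self._cache.move_to_end(prompt)
        if len(self._cache) > self._max_entries:
            self._cache.popitem(last=False)   # evict least recently used
        return response


def fake_server(prompt):
    # Hypothetical stand-in for the API call to the on-premises LLM server.
    return f"answer to: {prompt}"


client = CachedLLMClient(fake_server, max_entries=2)
client.ask("Is the user busy?")
client.ask("Is the user busy?")   # served from the cache
print(client.server_calls)
```

Repeated questions within the expiry window never reach the server, which is the effect the cache is meant to have on load and response time.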

Our decision to define the HRI Toolkit as a collection of ROS interfaces makes individual implementations interchangeable. Hence, it is easy to switch between edge-based, fog-based, or cloud-based implementations of the LLM component according to the specific requirements of the domain. This not only showcases the flexibility of the HRI Toolkit but also highlights the adaptability of NVIDIA’s dockerized LLM component. The computational power of the Jetson AGX Orin helped ensure that the LLM could seamlessly integrate into the broader architecture, augmenting the context management layer’s capabilities.
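
The same principle can be sketched in plain Python, outside ROS: callers depend on an interface, and the deployment choice is a single configuration value. The `SceneLLM` protocol, the three backend classes, and `make_backend` are hypothetical names for this illustration; in the toolkit itself the contract is expressed as ROS interfaces rather than Python classes.

```python
from typing import Protocol


class SceneLLM(Protocol):
    """The contract every implementation must satisfy (hypothetical)."""

    def describe(self, scene: str) -> str: ...


class EdgeLLM:
    def describe(self, scene: str) -> str:
        return f"[edge] {scene}"    # would run a model on the robot itself


class FogLLM:
    def describe(self, scene: str) -> str:
        return f"[fog] {scene}"     # would call an on-premises server


class CloudLLM:
    def describe(self, scene: str) -> str:
        return f"[cloud] {scene}"   # would call a remote cloud service


BACKENDS = {"edge": EdgeLLM, "fog": FogLLM, "cloud": CloudLLM}


def make_backend(deployment: str) -> SceneLLM:
    """Pick an implementation by name; callers only see the interface."""
    try:
        return BACKENDS[deployment]()
    except KeyError:
        raise ValueError(f"unknown deployment: {deployment!r}") from None


llm = make_backend("fog")
print(llm.describe("two people waiting near the elevator"))
```

Switching from a fog to a cloud deployment changes only the string passed to `make_backend`; none of the calling code needs to change.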

The successful integration of an LLM into the HRI Toolkit not only addresses the challenges of social data processing but also sets the stage for future innovations. A collection of Cogniteam’s HRI components will be made available in May 2025.

To learn more about the HRI Toolkit for your application, reach us at https://cogniteam.com/talk-to-an-expert/.

As organizations increasingly rely on these robotic fleets, the need for effective monitoring and management becomes paramount. However, the unique requirements of robotic systems, in comparison to generic cloud-based monitoring solutions for IoT devices or computer networks, necessitate specialized tools. In this blog post, we will delve into these distinctive requirements, the compelling reasons to opt for tailored cloud-based monitoring solutions, and the specific challenges facing cloud-based monitoring for robotic systems.

Robots are not your typical data sources. They require specialized views that overlay sensory output, such as LiDAR and Point Cloud, over maps generated by Simultaneous Localization and Mapping (SLAM) processes. These views provide critical insights into a robot’s spatial awareness and surroundings, which are essential for safe and efficient operation. In addition to spatial data, robotic monitoring solutions must also display camera views, internal states, and other unique metrics like battery levels and motor temperatures. This multifaceted data presentation goes far beyond the generic charts offered by conventional cloud monitoring systems, which are designed for more straightforward data streams.

Robotic systems come with their own set of challenges that demand tailored monitoring solutions. These challenges include:

Robotic systems can be as diverse as the tasks they perform. Each robot may have distinct sensors, data formats, and interfaces for data extraction. A generic monitoring solution may lack the flexibility required to seamlessly integrate with the myriad data sources, making it imperative to have a tailored monitoring system that can adapt to the specific data extraction needs of each robot in your fleet.

Fig 1: searching for ROS / Cogniteam Platform Streams to add to the Monitoring page of your robots in the Cogniteam Platform.

Data extraction and streaming should not interfere with a robot’s core tasks. Robots rely on their computational resources to navigate, manipulate objects, or perform complex functions. A tailored cloud-based monitoring system must be designed to minimize the computational overhead on the robot, ensuring data extraction and streaming have a negligible impact on its performance.
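
One common way to bound the cost of streaming is to cap the rate at which each stream is forwarded. The sketch below is a generic throttle, not the Cogniteam Platform's mechanism; `StreamThrottle` and its parameters are hypothetical, and the clock is injectable only so the example is reproducible.

```python
import time


class StreamThrottle:
    """Lets through at most `max_hz` messages per second and drops the rest."""

    def __init__(self, max_hz, clock=time.monotonic):
        if max_hz <= 0:
            raise ValueError("max_hz must be positive")
        self._min_interval = 1.0 / max_hz
        self._clock = clock
        self._last_sent = None
        self.dropped = 0

    def should_send(self):
        now = self._clock()
        if self._last_sent is None or now - self._last_sent >= self._min_interval:
            self._last_sent = now
            return True
        self.dropped += 1          # too soon after the previous message
        return False


# Simulated arrival times (seconds) of sensor messages on one stream.
timestamps = iter([0.0, 0.1, 0.5, 0.6, 1.0])
throttle = StreamThrottle(max_hz=2, clock=lambda: next(timestamps))
sent = [throttle.should_send() for _ in range(5)]
print(sent, throttle.dropped)
```

Because the check is a single subtraction per message, the throttle itself adds almost nothing to the robot's workload while keeping upload bandwidth and encoding work predictable.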

The demand for robotics in various industries is rapidly growing, leading to larger fleets of robots. Each robot might require multiple viewers to access monitored data simultaneously. To meet these scaling requirements, a tailored cloud-based monitoring system should be able to accommodate an increasing number of robots and viewers efficiently. It should ensure that all viewers can access the monitored data without significant delays.

Robotic systems increasingly rely on cloud-based decision-making processes and management systems. Therefore, the latency between data capture and its availability in the cloud is critical. A tailored monitoring system is designed to minimize latency, ensuring that the data is readily available for cloud-based decision-making processes. This low latency is crucial for real-time task execution and timely response to changing conditions.

The data monitored by robotic systems serves various stakeholders, not only human operators. Tailored views, as discussed earlier, are essential for providing insights into sensor data, internal states, and camera views. Furthermore, flexibility in layout customization is vital because each operator may have distinct preferences in how they wish to view the data. A tailored monitoring system can offer a range of layout options to accommodate individual operator needs.

Fig 2: Selecting different types of viewers for the different robot streams in the Cogniteam Platform.

When considering monitoring solutions for a fleet of robots, it’s vital to recognize the unique demands and challenges that robotics presents. While generic cloud-based monitoring tools have their merits, they are ill-suited for the intricacies of robotic systems. Tailored solutions are purpose-built to address the specific needs of robots, offering specialized views, real-time data processing, edge computing capabilities, and peer-to-peer communication, among other advantages.

Investing in a dedicated robotic monitoring solution is not only a time-saving measure but also a strategic one that will lead to more efficient operations and greater reliability. The success of your robotic fleet hinges on your ability to adapt and leverage the right technology, making tailored cloud-based monitoring solutions a critical component of your robotic ecosystem. Such solutions empower organizations to optimize their robotic operations, enhance safety, and deliver exceptional value in an increasingly automated world.

Fig 3: a Configurable Monitoring Dashboard with dynamic layouts and presets in the Cogniteam Platform.

Cogniteam’s Cloud Platform: For organizations seeking a comprehensive and tailored solution for cloud-based monitoring in robotics, Cogniteam’s Cloud Platform stands out as an ideal choice. This specialized platform is designed to address the unique challenges discussed above, making it a perfect fit for managing robotic fleets. With Cogniteam, you get a monitoring system that seamlessly integrates with various data extraction methods, ensuring flexibility and adaptability to diverse robotic systems. It prioritizes non-interfering data streaming to minimize computational overhead on robots, ensuring that their core tasks remain unaffected. Scalability is at the core of the platform’s architecture, allowing it to efficiently accommodate growing fleets with multiple viewers, all without compromising on data accessibility and low latency. Moreover, Cogniteam’s Cloud Platform offers customized views and layouts, ensuring that the monitored data caters to the specific needs and preferences of individual operators. In essence, Cogniteam’s Cloud Platform is the tailored, all-in-one solution that successfully conquers the challenges of cloud-based monitoring for robotic systems.

Cloud-based teleoperation technologies for robotics have gained increasing importance due to their ability to bridge the gap between human operators and remote robotic systems, fulfilling various needs across industries.

However, implementing effective cloud-based teleoperation systems comes with several challenges. A human operator, or a cloud-based algorithm for that matter, must have a continuous, real-time stream of the robot’s cameras and other sensors to make the split-second decisions that may be required. These streams may need to be shared across multiple clients in a scalable manner. The operator’s decisions must be delivered to the robot reliably and with minimal delay. Incoming and outgoing streams must be secure to some degree. On top of that, the robot may roam from one network to another, which leads to varying signal strengths, loads, and latencies.

In cloud-based teleoperation, choosing a protocol is a critical consideration. While peer-to-peer protocols with end-to-end encryption are ideal for secure one-on-one communication, they face challenges in efficiently sharing a robot’s dynamic camera streams with multiple users in a scalable manner. Attempting to extend peer-to-peer solutions by opening multiple channels may strain the robot’s performance. Traditional video-chat protocols, which are typically optimized for somewhat static views (i.e., one person in front of a static background), are ill-suited for the dynamic nature of the camera streams of a mobile robot, making it essential to explore alternative protocols. The question then arises: which protocol can best meet our needs?

gRPC, an open-source remote procedure call (RPC) framework originally developed at Google, has emerged as a powerful tool for building efficient, cross-platform, and language-agnostic APIs. It was originally designed for communication between microservices, so it may not be the immediate choice for a teleoperation protocol. WebRTC, on the other hand, was designed for real-time communication and comes to the forefront when peer-to-peer interaction and low latency are needed.

There are many additional protocols out there, some of which one would not immediately consider for teleoperation but which may still hold advantages over the more prominent protocols in some cases. Let’s explore some of the main challenges and how we may choose to address them.

Data Integrity and Reliability: One of the standout features of gRPC is its reliance on TCP, a reliable transport protocol. This ensures that messages sent between the robot and operator are delivered in the order they were sent. In critical teleoperation scenarios, where precision and safety are paramount, this attribute becomes invaluable. The guaranteed delivery of instructions is particularly crucial when handling hazardous materials or situations where even minor errors can have significant consequences.

Security Measures: gRPC integrates seamlessly with Transport Layer Security (TLS), which provides strong encryption and authentication mechanisms. This ensures that sensitive data, including video feeds and control commands, are protected during transit. When the stakes are high, gRPC offers a reassuring level of security that meets the stringent requirements of applications like remote surgery or disaster response.

Peer-to-Peer Communication: In cloud-based teleoperation systems where the robot’s camera feeds must be instantly accessible to the operator for split-second decisions, WebRTC excels. Its architecture is inherently peer-to-peer, allowing for direct and swift data exchange between the robot and the operator, reducing latency, and providing real-time control.

UDP for Low Latency: WebRTC uses UDP, which, unlike TCP, does not impose strict ordering and reliability constraints. While this can result in occasional packet loss, it is beneficial in cases where minimizing latency is a priority. In situations where an operator needs to guide a robot through a remote location with unstable or varying network conditions, low-latency communication becomes critical.
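
The trade-off can be illustrated with a toy receiver that follows a "latest wins" policy: when packets may arrive late or out of order, a video viewer is better off showing the newest frame and discarding anything older. This is only an illustration of the idea; WebRTC's media stack handles sequencing internally, and the `LatestWinsReceiver` class and its fields are hypothetical.

```python
class LatestWinsReceiver:
    """Keeps only frames newer than the newest one already shown."""

    def __init__(self):
        self._highest_seq = -1
        self.discarded = 0

    def accept(self, seq, payload):
        """Return the payload if it should be shown, or None if it is stale."""
        if seq <= self._highest_seq:
            self.discarded += 1    # arrived after a newer frame was shown
            return None
        self._highest_seq = seq
        return payload


# Frames arrive over an unordered transport: frame 1 is late.
arrivals = [(0, "frame0"), (2, "frame2"), (1, "frame1"), (3, "frame3")]
receiver = LatestWinsReceiver()
shown = [p for p in (receiver.accept(s, f) for s, f in arrivals) if p is not None]
print(shown, receiver.discarded)
```

A reliable, ordered transport would instead hold back frame 2 until frame 1 arrived, which is the right behavior for control commands but adds delay to a live camera view.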

The Unified Solution: Selecting Between Different Protocols ‘On the Fly’

A cloud-based teleoperation system for robots is dynamic by nature, and no single protocol can cater to all scenarios and challenges. To achieve the highest level of adaptability, a well-engineered system should be capable of switching between different protocols (such as gRPC and WebRTC) based on immediate requirements.

Intelligent Decision-Making: An intelligent system can evaluate the current network conditions, the criticality of the task, and other relevant factors to determine the most suitable protocol. For instance, if the robot operates in a stable network environment with complex precision tasks, it might opt for gRPC. However, if the robot enters an area with a higher risk of latency and requires quick responses, the system can seamlessly transition to WebRTC.
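
A minimal sketch of such a decision rule follows. The thresholds, the `LinkStats` fields, and the `choose_protocol` function are assumptions chosen for illustration and are not the platform's actual logic; in this sketch every case other than "stable link and critical task" falls back to WebRTC.

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    rtt_ms: float        # measured round-trip time in milliseconds
    packet_loss: float   # fraction of packets lost, between 0.0 and 1.0


def choose_protocol(stats, task_is_critical,
                    rtt_limit_ms=150.0, loss_limit=0.02):
    """Pick a protocol from current link quality and task criticality.

    The limits are illustrative defaults, not recommended values.
    """
    link_is_stable = (stats.rtt_ms <= rtt_limit_ms
                      and stats.packet_loss <= loss_limit)
    if link_is_stable and task_is_critical:
        return "grpc"     # ordered, reliable delivery for precision tasks
    return "webrtc"       # favor low latency on degraded links


print(choose_protocol(LinkStats(rtt_ms=40.0, packet_loss=0.0), True))
print(choose_protocol(LinkStats(rtt_ms=400.0, packet_loss=0.1), True))
```

A production system would likely smooth the measurements over time so that brief spikes do not cause the protocol to switch back and forth.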

Resilience to Network Changes: Cloud-based teleoperation systems need to account for the robot’s movements and potential shifts between networks, such as Wi-Fi to cellular. In such cases, the ability to dynamically switch between protocols can maintain the quality of service and allow the teleoperator to adapt to the changing circumstances seamlessly.

Support of several protocols in cloud-based teleoperation systems for robotics is crucial for addressing the diverse and ever-changing challenges of remote control. These protocols can offer distinct advantages that, when employed together and dynamically, can ensure optimal performance, safety, and adaptability in the most demanding environments.

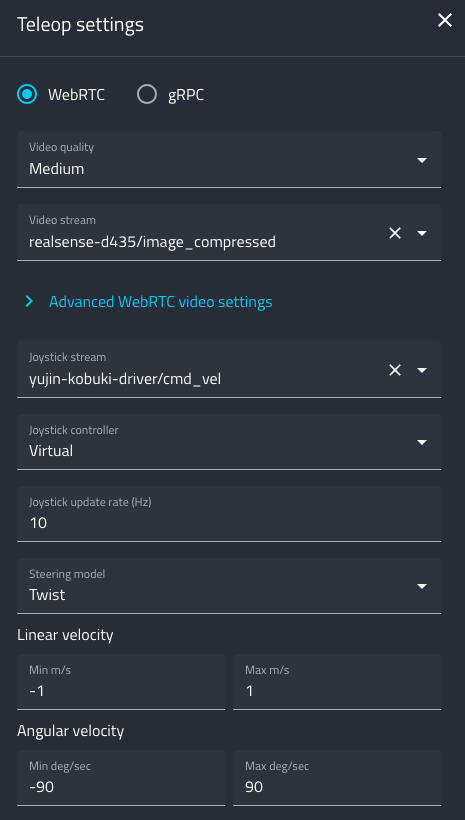

Fig 1: real-time teleoperation from anywhere in the world using Cogniteam’s platform.

Cogniteam’s Cloud Platform empowers users to craft their custom Teleoperation dashboards. This adaptable platform allows for the convenient assignment of buttons tailored to specific tasks, whether it’s triggering an emergency stop, initiating a return to the docking station, or activating custom code. Furthermore, the platform offers the versatility of employing various joysticks to manually control different robot actuators and manipulators. Users can confidently select between different protocols to align with their precise requirements, ensuring an optimal teleoperation experience.

Fig 2: teleoperation settings window. The user can choose on the fly between WebRTC and gRPC and select the desired streams, their qualities, and other properties. Cogniteam’s Platform is already built to integrate emerging protocols as they appear.

Actually, we were early adopters of Player/Stage, the ROS predecessor. Back then, vendors were hesitant to join in the Robot Operating System buzz. Generic protocols, software packages, and visualization tools were something that each company would develop internally, again and again. Linux was considered good for academia and hackers, and Windows was competing to get a foot into the robotics market with Microsoft Robotics Developer Studio.

Back then, making a driver work would usually mean compiling your own Linux kernel and reading through obscure forums by candlelight, or, as my lab professor would say, “Here be dragons.” By the time you could see real image data streaming through your C++ code, your laptop’s graphics driver would usually stop working due to incompatible dependencies, and Ubuntu would crash on boot.

By now, more than a decade has passed. ROS has come into the picture, making data visualization, SLAM algorithms, and navigating robots something that anyone with some free time and a step-by-step tutorial can follow through, test, and customize. Robotic Sensor / Platform vendors themselves are now accepting ROS and releasing git repositories with ready-made ROS nodes — nodes that they themselves used to test and develop the hardware.

This gives the impression that the basic growing pains of robotic software development are now long gone. Buying off-the-shelf components, and building your own robot has never been easier, and that is before we even talk about simulation tools and the cloud.

Here is a weird fact: most of the robots created today are still closed boxes; the OS cannot be updated, and they are not ROS-based. iRobot, for example, discussed in 2019 its intention to move away from a proprietary operating system to a ROS-based one (source), and is currently using ROS only for testing its infrastructure in AWS RoboMaker (source). This is just one example. If you take a look at the robots around you, most of the issues they face will never be solved, and their behavior will not change greatly. Today’s ROS-based robots will be replaced by a whole new OS with a full-blown new ROS release in a new robot. But what happens to the old robot and the old code? Remember when phones behaved that way? Before FOTA (Firmware Over The Air). Before app stores. Before Android.

Here is another fun fact: every new robot out in the wild has a blog. There is a YouTuber out there uploading a review of it and comparing it to all the other vendors’ models and algorithms. Why are the companies not doing that to start with? Whole setups are hard to break down and reassemble. Program flows are not transferable. We know: in 2012 we released some basic behavior tree decision-making code to ROS. It took until ROS2 to see a behavior engine first used as a ROS standard component. Have you ever tried to reconfigure move_base between robots, set up TFs, and reconfigure thresholds for negative and positive obstacles when the sensor type or position changed? Update its simulation model? Make sure its dependencies are met across the various ROS versions provided by the vendor?

Sounds like we are back to square one, doesn’t it?

Cogniteam’s 3D configuration editor

Cogniteam started with those examples in mind, as a way to break the cycle by providing tools to develop, package, deploy and manage cloud-connected robots. Our cloud-based platform uses containerized applications as software components. On our platform, these software components can be organized, connected, and reassembled by code, console interface, or from the web using GUI, making anyone (even without ROS-specific know-how) able to understand and see the various building blocks that compose the robot execution. The goal of deconstructing the mission to containerized blocks is also to untie the problematic coupling of OS and ROS versions by providing isolation and enabling the use of various ROS distributions on the same robot, including ROS1 and ROS2 components together.

Components can now be easily replaced, which makes testing alternative algorithms easier, and robot access can be shared between operators and developers to allow remote access to the robot at any time. This does not require installing anything on the robot itself, as all installations are managed by an agent running as a service on the robot. Multiple users can see live data or access the robot configuration and change it. Using the platform backbone is like having a whole DevOps team inside your team.

The robot configuration and the tools for viewing and editing it are also important aspects of the platform. By building the robot model on the platform (configuring the robot sensors and drivers), you can keep track of driver versions, monitor the devices, generate TFs (coordinate transformation services for components), and auto-generate a simulation for your code. This lets you change a sensor’s location or type and test alternative scenarios in simulation, all without any coding. The platform also provides introspection and visualization, with analytics tools coming soon, to ease the development of robots and bring on the robotic revolution.
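
To show what a generated transform encodes, here is a small 2D sketch: a sensor's mounting pose on the robot becomes a homogeneous transform that maps points from the sensor frame into the robot's base frame. The function names and the mounting values are hypothetical, and real TF trees are 3D and managed by ROS; this only illustrates the underlying arithmetic.

```python
import math


def make_transform(x, y, yaw):
    """Homogeneous 2D transform for a sensor mounted at (x, y), rotated by yaw."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, x],
            [s,  c, y],
            [0.0, 0.0, 1.0]]


def to_base_frame(transform, point):
    """Map a point from the sensor frame into the robot base frame."""
    px, py = point
    bx = transform[0][0] * px + transform[0][1] * py + transform[0][2]
    by = transform[1][0] * px + transform[1][1] * py + transform[1][2]
    return bx, by


# Hypothetical mounting: lidar 0.2 m ahead of the base, rotated 90 degrees.
lidar_to_base = make_transform(x=0.2, y=0.0, yaw=math.pi / 2)

# An obstacle 1 m straight ahead of the lidar, expressed in the base frame.
obstacle = to_base_frame(lidar_to_base, (1.0, 0.0))
print(round(obstacle[0], 3), round(obstacle[1], 3))
```

Moving the sensor in the model amounts to changing the three mounting numbers; every point that sensor reports is then reinterpreted through the new transform without touching the code that consumes it.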

Log in, start developing right now, and stay tuned for what is to come.

We have just started.

A brilliant yet urgent idea that needs to be tested, verified, and implemented in the shortest time frame possible. Off-the-shelf components and ROS are great for that.

Grab a depth cam, strap on a lidar, secure it on a skateboard, control it with a Raspberry Pi and an Arduino, download a ROS-enabled mapping node, open Rviz, and there you have it.

Done. Your first demo (loud crowd cheers in the background).

In this setup – Hardware: Seeed Jetson Sub Kit, Velodyne Lidar, RealSense. Software: Cogniteam

Then a few months in, maybe a year, maybe more, somewhere along the way the direction blurs. The intentions were clear. During early integration tests, several operation modes needed to be managed, so a simple state machine was implemented. Recordings needed to be logged, so one of the engineers built a tool for that. Maybe the robot needed to be controlled remotely for a client demo, so image streaming became an issue. Several robots might have had slightly different calibration parameters and configurations, so tools were built and scripts were written.

Minor things, each derailing only slightly from the original task, grew by just a bit.

Developing a platform takes time, and as your team grows and personnel change, standards become critical and tools are needed. That is when many systems reach EOL (end of life), tools become obsolete, and maintenance becomes an issue. These tasks are so time-consuming that they block progress, delay goals, and eventually result in an unstable product that is behind schedule.

This is where focus comes into the picture. To bring focus to a robotic company, we believe that two questions need to be answered; they are the same for both the hardware and the software aspects of robotics.

Many tools and frameworks are needed to develop robots, and the more time and effort a company spends on its own IP, the better the return on that investment. In a competitive market, companies differ by excelling at their core innovative features. Do not try to rebuild every tool you need.

When deciding to develop an internal solution, or opting to use a simpler one, technical debt is incurred. The question is the company’s technical ability to develop that same solution at any given time (if the need arises) and to integrate it within its software stack. A robotic company starts off with a prototype and three founders, but if successful it will grow very quickly and will need to be able to support deployment and production at large scales. All robotic companies will need to develop tools for remote access, updates, data profiling, permission management, and much more. These tools are not needed when the company is small and has only a prototype, but they eventually become absolutely crucial for its success. Does every company need to redevelop its own tools for that?

By using a framework such as our platform, risks can be mitigated, enabling the companies to go on and develop their IP, while gaining the flexibility of a community-oriented and commercially supported framework that enables them to integrate cutting-edge services without the risk of getting stuck behind. Use ROS or any other architecture that enables you to quickly prototype and implement your ideas, but be sure to deploy, test, and package it using the state-of-the-art tools. Do not reinvent your own tools for that. Keep your development team focused on the things that differentiate your company from your competitors by focusing on how your robot excels at its task.

Focus is one of the key aspects of product development, and this is Cogniteam’s goal in your development chain: to bring tools and order that will enable you to focus on your IP.

And this is your skateboard, with a 3D lidar and a Stereo camera :-)

In this exposition, I invite you to embark on a journey through the intricate landscape of robotics simulations, shedding light on the diverse types and their tailored applications. Our overarching objective? To enhance client performance in manufacturing, medical, logistics, retail, and other domains. As we traverse the evolution of robotics simulations, from their humble beginnings to today’s cutting-edge AI technologies, you’ll discover how each stage of a robotics project necessitates unique simulation features and capabilities.

This is part 1 of 4 in our series. Today we discuss the diverse stakeholders’ needs. Next, in parts 2 and 3 we’ll discuss 4 different stages of a robotic product and the relevant simulations for each one. Finally, in part 4 we’ll see how we at Cogniteam fit in with our simulation and summarize the main takeaways.

Part I – Diverse Stakeholder Needs

How would different stakeholders require specific features from robotics simulations? Let’s delve into those needs in more detail:

Machine Learning Training

Physical Design Testing

Efficiency for the Company

Complex Scenario Testing

Code Testing

Product Showcase

Tailored to showcase robot features to clients.

Demonstrates how the robot addresses client pain points.

Performance Optimization

Facilitates predictive maintenance and performance enhancement.

Back in 2014, the authors of the paper above asked roboticists what they needed from a robotics simulator.

Most things mentioned in this paper are relevant to this day. For instance, the survey participants said that their highest priority for a simulation is stability. Next are speed, precision, accuracy of contact, and the same interface between real and simulated systems. It was surprising to see that “visual rendering” came in at number 8, but it was 2014. A lot has changed since then, most notably the ability to render photo-realistic simulations to train vision-based ML algorithms.

We can also see what the participants answered about the most important criteria for choosing a simulator, which highlights the gap between simulation and the real world. Open source is very important as well – not just to reduce costs, but also to have the support of the community. Participants want to run the same code whether they are operating the robot or the simulation, to further reduce the gap between the two. The simulation should also be light and fast enough that we can test every few changes to the code.

As we can see, each stakeholder has a different view of what a robotic simulation needs to provide, and as you may expect, there’s no one-size-fits-all solution. Fortunately, these needs tend to arise at different stages. In Part 2 we will start delving into the different stages and which type of simulation may be the right one for each.

This blog was brought to you by Builder Nation, the community of Hardware leaders developing world-changing products, and sponsored by ControlHub, the purchasing software for hardware companies.

The world has seen rapid technological advancements in the past few decades, particularly in robotics. The robotics industry has revolutionized how we live and work and has become an integral part of modern society. With the industry’s growth, it has become increasingly important to understand its impact and potential for the future.

Companies like Cogniteam are essential as they provide innovative and cutting-edge solutions that help businesses automate their operations, optimize their processes, and improve their overall performance.

Robotics Industry

The robotics industry has a long history, dating back to the introduction of the first industrial robot in the 1950s. In recent years, however, the industry has experienced remarkable growth. The market size is projected to reach $149,866.4 million (roughly $150 billion) by 2030, with a compound annual growth rate (CAGR) of 27.7%.

These programmable machines can perform tasks autonomously or with minimal human intervention. They have transformed manufacturing, healthcare, and agriculture industries, making processes more efficient and cost-effective.

Robotics has also allowed for advancements in space exploration and has been used in military and defense applications. Trends and improvements in the industry include the use of collaborative robots, or cobots, which work alongside humans to perform tasks, and artificial intelligence, which allows for greater autonomy in robotic systems.

The expanding robotics industry has numerous applications across various fields. For instance, industrial robotics has transformed manufacturing processes, improving efficiency and precision in assembly lines. Collaborative robots, or cobots, have also become increasingly common, allowing robots to work alongside humans to perform tasks such as assembly, packaging, and inspection.

In the healthcare industry, robots are used for various applications, including surgical procedures, patient care, and rehabilitation. Telepresence robots have enabled doctors to communicate with patients remotely, improving access to healthcare.

Additionally, robotics in agriculture and farming has led to greater efficiency and precision in farming operations. Robots are used for planting, harvesting, and irrigation, while drones are used for crop monitoring and analysis.

The military and defense industry has also embraced robotics for various tasks such as surveillance, bomb disposal, and reconnaissance, performing dangerous tasks that would be too risky for humans.

In space exploration, robots have been essential for exploring distant planets and other celestial bodies. Designed to operate in low-gravity environments and to withstand extreme temperatures and radiation, they provide valuable data about these bodies.

Artificial intelligence (AI) advancements have been one of the most significant trends in industrial robotics. AI algorithms allow robots to learn and adapt to their environment, improving their performance and reducing errors.

Sectors

Cogniteam operates in various industries and sectors, providing tailored solutions for each.

Here are some of the sectors:

Agriculture: the agricultural solutions help farmers optimize their operations and improve their crop yields. Using advanced technologies such as machine learning and computer vision, farmers can be assisted in monitoring their crops, identifying potential issues, and taking corrective action before problems occur.

Delivery: these solutions allow businesses to optimize their delivery processes, improve their delivery times, and reduce their costs, so they can streamline their operations and offer faster and more efficient delivery services.

Real Estate: these solutions allow businesses to optimize their real estate operations, improve their efficiency, and reduce their costs. Cogniteam’s real estate solutions can help businesses automate their processes, monitor their properties, and make data-driven decisions about their real estate portfolios.

A Look into the Future

Looking into the future, the robotics industry will continue transforming our lives. Cogniteam is at the forefront of this industry, providing innovative solutions for businesses to manage their robotic fleets easily.

As the industry evolves, we expect to see the continued growth of collaborative robots and significant advancements in healthcare and agriculture. Moreover, AI and machine learning, swarm robotics, and human-robot interaction are areas of focus for future research and development.

The future of the robotics industry is bright, and Cogniteam is leading the way with cutting-edge solutions.

Book a demo and start your way to the finish line.

Absolutely! In today’s world, a robotic start-up company usually takes 6 years to get to market, while a software company takes a year and a half or two. Robotic start-up companies start with a small prototype and expect to grow directly to production and sales. So they think…

Cogniteam’s Co-Founder and CEO, Dr. Yehuda Elmaliah, was invited to speak at the DAI 2021 virtual conference, Shanghai, China. The aim of the Distributed Artificial Intelligence (DAI) conference is to bring together researchers and practitioners in related areas and to provide a single, high-profile, internationally renowned forum for research in the theory and practice of distributed AI, with more than 1,000 online participants.

Dr. Elmaliah was born in the ’70s and spent his childhood in the ’80s, when pop-culture movies and TV shows such as “Transformers” and “The Terminator” ignited his imagination and left him wondering what the future would hold. Forty years later, the robotic revolution he expected has yet to arrive: today’s consumer robotics consists mainly of vacuum cleaners. Not exactly the revolution I imagined…

Today is the “Golden Age” of single-mission robots. In the next 15-20 years, we will see more robots doing one thing, one task: from drone deliveries and autonomous warehousing robots to cleaning and inspection robots. By 2025, 50K warehouses and logistics centers will be mostly autonomous and will use 4M robots. The industrial and service robot market will increase from 76.6 billion USD in 2020 to 176.8 billion USD in 2025. The robotics revolution starts now.
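To put the two market figures above on a per-year footing, here is a minimal sketch of the standard compound annual growth rate calculation. The function name `cagr` is ours, and the inputs are simply the 2020 and 2025 values quoted above; under those assumptions the implied growth works out to about 18% per year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: 76.6 billion USD (2020) to 176.8 billion USD (2025).
implied = cagr(76.6, 176.8, 2025 - 2020)
print(f"Implied CAGR: {implied:.1%}")
```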

Building a robot is all about integration: choosing the right computing power, the right sensors, and the right actuators, not developing them.

The main time-consuming aspect of robot development is the software. By shifting to the cloud, robotic companies can benefit, improve, and save valuable time and money. It can reduce problems by utilizing data to gain knowledge, discover market trends, learn usage profiles and optimize robot components, and eventually increase business results.

Until today, companies had to develop their own tools for teleoperation, over-the-air (OTA) updates and upgrades, fleet management, monitoring, analytics, and remote debugging. Creating this cloud infrastructure is a real challenge, resulting in a longer time-to-market for robotic companies.

Cogniteam’s cloud-based solution enables robotic companies to focus on their core IP development while integrating off-the-shelf software – because robotics is all about integration. Starting a robotic company with the right cloud-based infrastructure from the outset makes all the difference! Expedite your time-to-market, enhance your capabilities, and outperform your competitors.

Well… you can! And I’m not just talking about running a simulated robot; I’m talking about turning your own laptop into a “robot” and running cool stuff on it using our platform.

Here is a video tutorial on how to do it step by step:

Basically, you need to do 3 things:

When you add a new robot you’ll get an installation string you can just copy and paste to your Linux terminal and let the platform do all the rest.

When your “robot” is online, click on it and go to Configuration.

Congratulations, you’re at step B!

See how easy it is to build a configuration (01:32 in the tutorial), or just go to the public configurations and use the one I made for you. Look for the configuration “Try Nimbus On Your Laptop Configuration” under Hub -> Filter by Configurations -> Page 2 -> try_nimbus_on_your_laptop_configuration

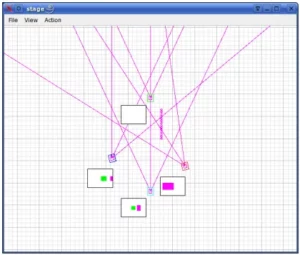

Let’s review the different components and their interactions (see the following figure).

The component in red is the webcam driver. It outputs a raw uncompressed image (image_raw). This output is the input of 3 different components. At the top, you’ll find the openvino-cpu-detection component, which wraps an algorithm that uses a neural network to detect objects. It outputs an image in which the detected objects are labeled with text and bounding boxes. This output is connected to the image-to-image-compressed component which, as you can imagine, compresses the image to reduce bandwidth.

Next is the hands-pose-detection component which outputs an image with hand skeleton recognition. Such components are useful for gesture detection which can communicate different instructions to a robot.

At the bottom, you’ll find the circle-detection component which outputs an image with the biggest circle detected in the image. Such components are useful for tracking items such as balls. You can easily write a behavior that tells the robot to follow a ball or to forage balls etc.
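The wiring described above can be sketched as plain data. This is only an illustration, not how the platform stores a configuration: the component names and image_raw come from the text, while the name `webcam-driver`, the stream name `labeled_image`, and both helper functions are assumptions made for the example.

```python
from collections import defaultdict

# Each entry is (producer, stream, consumer).
# "webcam-driver" and "labeled_image" are hypothetical names for illustration.
CONNECTIONS = [
    ("webcam-driver", "image_raw", "openvino-cpu-detection"),
    ("webcam-driver", "image_raw", "hands-pose-detection"),
    ("webcam-driver", "image_raw", "circle-detection"),
    ("openvino-cpu-detection", "labeled_image", "image-to-image-compressed"),
]


def consumers_of(stream, connections=CONNECTIONS):
    """Return the components that take the given stream as input."""
    return sorted(c for _, s, c in connections if s == stream)


def downstream(component, connections=CONNECTIONS):
    """Return every component reachable from the given component."""
    graph = defaultdict(list)
    for producer, _, consumer in connections:
        graph[producer].append(consumer)
    seen, stack = [], [component]
    while stack:
        node = stack.pop()
        for nxt in graph[node]:
            if nxt not in seen:
                seen.append(nxt)
                stack.append(nxt)
    return sorted(seen)


# The raw webcam image fans out to three components.
print(consumers_of("image_raw"))
# Only the object-detection branch has a further compression step.
print(downstream("openvino-cpu-detection"))
```

Writing the graph down this way makes the fan-out explicit: one producer, three parallel consumers, and a single extra stage on the detection branch.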

You are ready to have some fun!

Deploy the configuration. Wait for it to install – you can see the progress in the Overview tab of the robot’s dashboard. Now hit the Streams tab and view the different image streams.

So there you go. You’ve configured the robot, and as you can imagine, you can have it watch for gestures that tell it to follow you or to stop, or have it follow a ball, and so on…
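As a rough idea of what a “follow a ball” behavior could look like, here is a minimal sketch. It assumes a detector that reports the biggest circle as `(x, y, radius)` in pixels (the circle-detection component in the text outputs an image, so this tuple is an assumption), and the function name, gain, speed, and sign convention are all hypothetical.

```python
def follow_ball_command(circle, image_width, gain=1.0, min_radius=10, speed=0.2):
    """Turn a detected circle into a (linear, angular) velocity pair.

    circle: (x, y, radius) in pixels, or None when nothing was detected.
    Positive angular values mean "turn left"; this convention is an assumption.
    """
    if circle is None:
        return (0.0, 0.0)  # nothing to follow: stop
    x, _, radius = circle
    if radius < min_radius:
        return (0.0, 0.0)  # too small to trust: stop
    half_width = image_width / 2
    # Horizontal offset of the ball from the image center, in the range [-1, 1].
    offset = (x - half_width) / half_width
    # Steer toward the ball: ball on the right (offset > 0) means turn right.
    angular = -gain * offset
    return (speed, angular)


print(follow_ball_command(None, 640))            # no ball: stop
print(follow_ball_command((480, 100, 30), 640))  # ball to the right: turn right
```

A gesture-driven version would follow the same pattern, with the detected gesture selecting between “follow” and “stop” instead of the circle position.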

Have fun!